Hello all,

This is a follow-up to my previous post, "Is it a good idea to purchase refurbished HDDs off Amazon?"

In this post, I will share my experience purchasing refurbished hard drives and upgrading my BTRFS RAID10 array by swapping all 4 drives.

TL;DR: All 4 drives work fine. I was able to replace the drives in my array one at a time, using a USB enclosure for the data transfer!

1. Purchasing & Unboxing

After reading the replies to my previous post, I ended up purchasing 4x WD Ultrastar DC HC520 12TB hard drives from eBay (Germany).

The delivery was pretty fast: I received the package within 2 days.

The drives were very well packed by the seller, in a special styrofoam tray and anti-static bags.

2. Sanity check

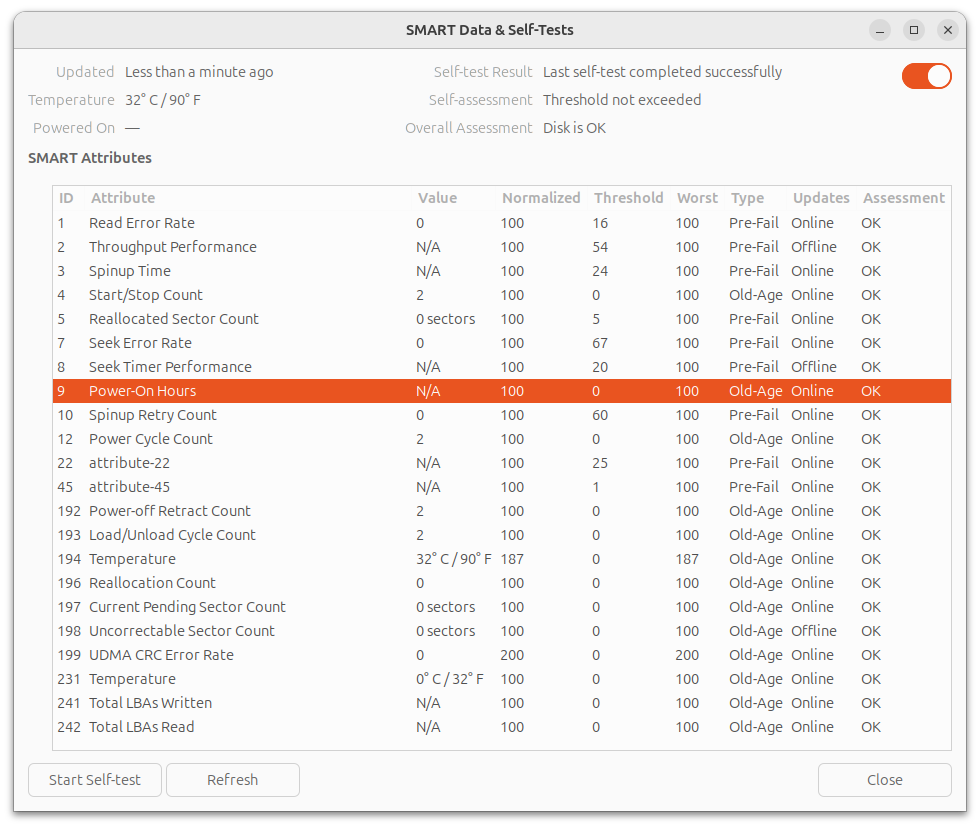

I connected the drives to a spare computer and booted an Ubuntu live USB to run a S.M.A.R.T. check and read the values. SMART self-tests and data are also available in GNOME Disks (gnome-disk-utility), if you don’t want to bother with the terminal.

All 4 disks passed the self-test. I even ran a complete (extended) test on 2 of them overnight, and both passed without any errors.

More surprisingly, all 4 disks report Power-On Hours as N/A or 0. I don’t think this means they are brand new; I suspect the values were erased by the reseller.
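If you prefer the terminal, the same information is available from `smartctl` (part of smartmontools, assumed to be installed). This is a minimal sketch that only prints the commands for each drive so they can be reviewed before running them with `sudo`; the helper name and device names are placeholders.

```bash
#!/usr/bin/env bash
# Print (do not run) the smartctl commands to inspect each candidate drive.
# The device names passed in are placeholders: check yours with lsblk first.
smart_plan() {
  local dev
  for dev in "$@"; do
    printf 'smartctl -H %s\n' "$dev"           # overall health verdict
    printf 'smartctl -A %s\n' "$dev"           # attribute table, incl. Power_On_Hours
    printf 'smartctl -t long %s\n' "$dev"      # start the extended self-test inside the drive
    printf 'smartctl -l selftest %s\n' "$dev"  # read the self-test log once it has finished
  done
}

smart_plan /dev/sdb /dev/sdc
```

The extended test runs inside the drive firmware and can take most of a day on a 12TB disk, so the `-l selftest` line is only useful once it has completed.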

3. Back up everything!

I selected one of the 12TB drives and installed it in an external USB3 enclosure. On my PC, I formatted the drive as BTRFS, with a single partition spanning the entire disk.
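For reference, a single whole-disk BTRFS partition can also be created from a terminal with `parted` and `mkfs.btrfs`. A minimal sketch that prints the commands; the helper name, `/dev/sdX` and the label `Backup` are placeholders, and once executed the commands wipe the target disk.

```bash
#!/usr/bin/env bash
# Print the commands to create one GPT partition spanning the whole disk and
# format it as BTRFS. DESTRUCTIVE once executed: double-check the device name.
backup_disk_plan() {
  local dev="$1" label="$2"
  # %% is a literal percent sign in printf, so this prints "0% 100%".
  printf 'parted -s %s mklabel gpt mkpart %s btrfs 0%% 100%%\n' "$dev" "$label"
  # Assumes sdX-style naming, where the first partition is <dev>1.
  printf 'mkfs.btrfs -L %s %s1\n' "$label" "$dev"
}

backup_disk_plan /dev/sdX Backup
```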

I then connected the now-external drive to the NAS and transferred all of my files (excluding a couple of things I definitely don’t need) using rsync:

```
rsync -av --progress --exclude 'lost+found' --exclude 'quarantine' --exclude '.snapshots' /mnt/volume1/* /media/Backup_2024-10-12.btrfs --log-file="$HOME/rsync_backup_20241012.log"
```

Actually, I wanted to run the command detached, so I scheduled it with `at` (not sure if this is the best method; feel free to suggest alternatives):

```
echo "rsync -av --progress --exclude 'lost+found' --exclude 'quarantine' --exclude '.snapshots' /mnt/volume1/* /media/Backup_2024-10-12.btrfs --log-file=$HOME/rsync_backup_20241012.log" | at 23:32
```
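On alternatives to `at`: a detached `tmux` session (`tmux new-session -d -s backup '<command>'`, then `tmux attach -t backup` to check on it) or plain `nohup` both keep a job running after you log out of SSH. A minimal `nohup` sketch; the helper name and log path are hypothetical.

```bash
#!/usr/bin/env bash
# Start a command in the background, immune to SIGHUP (e.g. an SSH logout),
# with stdout and stderr written to a log file. Prints the background PID.
run_detached() {
  local log="$1"; shift
  nohup "$@" >"$log" 2>&1 &
  echo "$!"
}

# Example (hypothetical log path):
# run_detached "$HOME/rsync_backup.log" rsync -av --progress --exclude 'lost+found' \
#   --exclude 'quarantine' --exclude '.snapshots' /mnt/volume1/* /media/Backup_2024-10-12.btrfs
```

The printed PID can be checked later with `ps -p <PID>`, and the log followed with `tail -f`.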

The total volume of data is 7.6TiB, and the transfer took 19 hours to complete.
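For a sense of scale, that averages out to roughly 122 MB/s over the USB3 link, taking 7.6 TiB as 7.6 × 2^40 bytes and 19 hours as 68,400 seconds:

$$
\frac{7.6 \times 2^{40}\ \text{B}}{19 \times 3600\ \text{s}} = \frac{8.356 \times 10^{12}\ \text{B}}{68\,400\ \text{s}} \approx 1.22 \times 10^{8}\ \text{B/s} \approx 122\ \text{MB/s} \approx 116.5\ \text{MiB/s}
$$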

4. Replacing the drives

My RAID10 array, a.k.a. volume1, consists of the disks sda, sdb, sdc and sdd, all of which are 6TB drives. My NAS has only 4 SATA ports, and all of them are occupied (volume2 is an SSD connected via USB3).

```
m4nas:~:% lsblk
NAME         MAJ:MIN RM   SIZE RO TYPE MOUNTPOINT
sda            8:0    1   5.5T  0 disk /mnt/volume1
sdb            8:16   1   5.5T  0 disk
sdc            8:32   1   5.5T  0 disk
sdd            8:48   1   5.5T  0 disk
sde            8:64   0 111.8G  0 disk
└─sde1         8:65   0 111.8G  0 part /mnt/volume2
sdf            8:80   0  10.9T  0 disk
mmcblk2      179:0    0  58.2G  0 disk
└─mmcblk2p1  179:1    0  57.6G  0 part /
mmcblk2boot0 179:32   0     4M  1 disk
mmcblk2boot1 179:64   0     4M  1 disk
zram0        252:0    0   1.9G  0 disk [SWAP]
```

According to the documentation I could find (btrfs replace - readthedocs.io; Btrfs, replace a disk - tnonline.net), the best course of action is to use the built-in `btrfs replace` command.

From there, there are 2 methods I can use:

- Method #1: connect each new drive, one by one, via USB3 to run `replace`, then swap the disks in the drive bays.
- Method #2: degraded mode; swap the disks one by one in the drive bays and rebuild the array.

Method #1 seemed faster and safer to me, so I decided to try it first. If it didn’t work, I could fall back to method #2 (which I had to do for one of the disks!).
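Method #2 in command form, as a minimal sketch based on the `btrfs replace` documentation: with the old disk removed, the array is mounted degraded, and the missing device is rebuilt onto the new disk by passing its devid instead of a device path. The helper name, devid, device names and mount point are placeholders; the helper only prints the commands.

```bash
#!/usr/bin/env bash
# Print the steps to rebuild a missing btrfs device onto a new disk.
#   $1 devid of the missing disk   $2 any remaining member of the array
#   $3 the new, empty disk         $4 mount point
degraded_replace_plan() {
  local devid="$1" member="$2" newdev="$3" mnt="$4"
  printf 'mount -o degraded %s %s\n' "$member" "$mnt"
  # -B keeps the replace in the foreground instead of backgrounding it.
  printf 'btrfs replace start -B %s %s %s\n' "$devid" "$newdev" "$mnt"
  printf 'btrfs filesystem show %s\n' "$mnt"
}

degraded_replace_plan 2 /dev/sdb /dev/sdc /mnt/volume1
```

While the array is degraded, the chunks that lived on the missing disk have no second copy until the rebuild finishes, which is one reason method #1 is the safer first choice.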

4.a. Replace the disks one-by-one via USB

I installed a blank 12TB disk in my USB enclosure and connected it to the NAS. It shows up as sdf.

Now it’s time to run the replace command, as described in "Btrfs, Replacing a disk: Replacing a disk in a RAID array":

```
sudo btrfs replace start 1 /dev/sdf /mnt/volume1
```

We can see that the new disk is shown as devid 0 while the replace operation is running:

```
m4nas:~:% btrfs filesystem show
Label: 'volume1' uuid: 543e5c4f-4012-4204-bf28-1e4e651ce2e8
        Total devices 4 FS bytes used 7.51TiB
        devid    0 size 5.46TiB used 3.77TiB path /dev/sdf
        devid    1 size 5.46TiB used 3.77TiB path /dev/sda
        devid    2 size 5.46TiB used 3.77TiB path /dev/sdb
        devid    3 size 5.46TiB used 3.77TiB path /dev/sdc
        devid    4 size 5.46TiB used 3.77TiB path /dev/sdd

Label: 'ssd1' uuid: 0b28580f-4a85-4650-a989-763c53934241
        Total devices 1 FS bytes used 46.78GiB
        devid    1 size 111.76GiB used 111.76GiB path /dev/sde1
```

It took around 15 hours to replace the disk. Once it was done, I got this:

```
m4nas:~:% sudo btrfs replace status /mnt/volume1
Started on 19.Oct 12:22:03, finished on 20.Oct 03:05:48, 0 write errs, 0 uncorr. read errs

m4nas:~:% btrfs filesystem show
Label: 'volume1' uuid: 543e5c4f-4012-4204-bf28-1e4e651ce2e8
        Total devices 4 FS bytes used 7.51TiB
        devid    1 size 5.46TiB used 3.77TiB path /dev/sdf
        devid    2 size 5.46TiB used 3.77TiB path /dev/sdb
        devid    3 size 5.46TiB used 3.77TiB path /dev/sdc
        devid    4 size 5.46TiB used 3.77TiB path /dev/sdd

Label: 'ssd1' uuid: 0b28580f-4a85-4650-a989-763c53934241
        Total devices 1 FS bytes used 15.65GiB
        devid    1 size 111.76GiB used 111.76GiB path /dev/sde1
```
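From the timestamps in the status output, the replace ran for 14 h 43 min 45 s (53,025 s). Assuming it copied roughly the 3.77 TiB allocated on the source device, the average rate works out to about 78 MB/s:

$$
\frac{3.77 \times 2^{40}\ \text{B}}{53\,025\ \text{s}} \approx \frac{4.145 \times 10^{12}\ \text{B}}{53\,025\ \text{s}} \approx 7.82 \times 10^{7}\ \text{B/s} \approx 78\ \text{MB/s} \approx 74.6\ \text{MiB/s}
$$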

In the end, moving the new disk from the USB enclosure to a SATA bay worked perfectly!

```
m4nas:~:% lsblk
NAME         MAJ:MIN RM   SIZE RO TYPE MOUNTPOINT
sda            8:0    0 111.8G  0 disk
└─sda1         8:1    0 111.8G  0 part /mnt/volume2
sdb            8:16   1  10.9T  0 disk /mnt/volume1
sdc            8:32   1   5.5T  0 disk
sdd            8:48   1   5.5T  0 disk
sde            8:64   1   5.5T  0 disk
mmcblk2      179:0    0  58.2G  0 disk
└─mmcblk2p1  179:1    0  57.6G  0 part /
mmcblk2boot0 179:32   0     4M  1 disk
mmcblk2boot1 179:64   0     4M  1 disk
zram0        252:0    0   1.9G  0 disk [SWAP]
zram1        252:1    0    50M  0 disk /var/log
```

```
m4nas:~:% btrfs filesystem show
Label: 'volume1' uuid: 543e5c4f-4012-4204-bf28-1e4e651ce2e8
        Total devices 4 FS bytes used 7.51TiB
        devid    1 size 5.46TiB used 3.77TiB path /dev/sdb
        devid    2 size 5.46TiB used 3.77TiB path /dev/sdc
        devid    3 size 5.46TiB used 3.77TiB path /dev/sdd
        devid    4 size 5.46TiB used 3.77TiB path /dev/sde

Label: 'ssd1' uuid: 0b28580f-4a85-4650-a989-763c53934241
        Total devices 1 FS bytes used 13.36GiB
        devid    1 size 111.76GiB used 89.76GiB path /dev/sda1
```

Note that I haven’t expanded the filesystem to 12TB yet; I will do this once all the disks are replaced.

The replace operation has to be repeated 3 more times, paying close attention each time to select the correct disk ID (2, 3 and 4) and replacement device (e.g. /dev/sdf).
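Device names can shift between boots (devid 2, for example, was /dev/sdb before the first swap and /dev/sdc after it), so it can help to look up the devid from the current `btrfs filesystem show` output rather than from memory, and to match the physical disk by serial number with `lsblk -o NAME,SIZE,SERIAL`. A minimal sketch; the helper name is hypothetical, and it assumes the `devid <N> size ... path <device>` line layout shown in the outputs in this post.

```bash
#!/usr/bin/env bash
# Read `btrfs filesystem show` output on stdin and print the devid whose
# path matches $1 (prints nothing if that path is not a member).
devid_for_path() {
  awk -v p="$1" '$1 == "devid" && $NF == p { print $2 }'
}

# Usage (btrfs needs root):
#   sudo btrfs filesystem show /mnt/volume1 | devid_for_path /dev/sdc
```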

4.b. Issue with replacing disk 2

While replacing disk 2, a problem occurred. The replace operation stopped progressing, despite not reporting any errors. After waiting a couple of hours and confirming it was stuck, I decided to do something reckless that caused me a great deal of trouble later: to kick-start the replace operation, I unplugged the power from the USB enclosure and plugged it back in (DO NOT DO THAT!). It seemed to work, and the transfer started progressing again. But once it completed, the RAID array was broken and the NAS wouldn’t boot anymore. (I will only talk about the things relevant to the disk replacement and skip all the stupid things I did that made the situation worse; it took me a good 3 days to recover and get back on track…)

I had to forget and remove both drive ID=2 (the drive being replaced) and ID=0 (the ‘new’ drive) from the RAID array, in order to mount the array in degraded mode and restart the replace operation using method #2. In the end it worked, and the 12TB drive is fully functional. I suppose the USB enclosure is not the most reliable, but the next 2 replacements worked just fine, like the first one.

What I should have done: abort the replace operation and start over.
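Aborting is done with `btrfs replace cancel <mount point>`, after which `btrfs replace start` can be run again (its `-f` flag forces reuse of a target that already looks like it holds a btrfs filesystem). To notice a stall early, progress can be polled with `btrfs replace status -1`, which prints the status once instead of continuously. The sketch below assumes a running replace reports a line like `12.3% done, 0 write errs, 0 uncorr. read errs`; that format, the helper names and the default intervals are assumptions, and the script only suggests the cancel rather than running it.

```bash
#!/usr/bin/env bash
# Extract the percentage from one line of `btrfs replace status -1` output.
# ASSUMPTION: a running replace prints "NN.N% done, ..."; anything else
# (e.g. the "Started on ..., finished on ..." summary) yields an empty string.
replace_percent() {
  printf '%s\n' "$1" | sed -n 's/^ *\([0-9][0-9.]*\)% done.*/\1/p'
}

# Poll every INTERVAL seconds (default 600). If the percentage has not changed
# for MAX_IDLE consecutive polls (default 6, i.e. one hour), report a stall.
watch_replace() {
  local mnt="$1" interval="${2:-600}" max_idle="${3:-6}"
  local last="" idle=0 now
  while :; do
    now="$(replace_percent "$(btrfs replace status -1 "$mnt")")"
    if [ -z "$now" ]; then
      echo "no replace in progress on $mnt"
      return 0
    fi
    if [ "$now" = "$last" ]; then
      idle=$((idle + 1))
    else
      idle=0
      last="$now"
    fi
    if [ "$idle" -ge "$max_idle" ]; then
      echo "stuck at ${now}% for $((idle * interval))s; consider: btrfs replace cancel $mnt"
      return 1
    fi
    sleep "$interval"
  done
}

# Usage (as root): watch_replace /mnt/volume1
```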

4.c. Extend the volume to the full drive size

Now that all 4 drives in my RAID array have been upgraded to 12TB, I extended the filesystem to use all of the available space:

```
sudo btrfs filesystem resize 1:max /mnt/volume1
sudo btrfs filesystem resize 2:max /mnt/volume1
sudo btrfs filesystem resize 3:max /mnt/volume1
sudo btrfs filesystem resize 4:max /mnt/volume1
```
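The four resize commands differ only in the devid, so a loop produces the same list. A minimal sketch that prints the commands; the helper name is hypothetical, and devids 1 to 4 are assumed as in the commands above (confirm them with `btrfs filesystem show` first). Afterwards, `btrfs filesystem usage /mnt/volume1` should report the larger device sizes.

```bash
#!/usr/bin/env bash
# Print one "resize <devid>:max" command per devid for the given mount point.
resize_plan() {
  local mnt="$1"; shift
  local id
  for id in "$@"; do
    printf 'btrfs filesystem resize %s:max %s\n' "$id" "$mnt"
  done
}

# Review the output, then run it with: resize_plan /mnt/volume1 1 2 3 4 | sudo sh
resize_plan /mnt/volume1 1 2 3 4
```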

5. Always keep a full backup!

Earlier, I mentioned using one of the ‘new’ 12TB drives as a backup of my data. Before putting it in the NAS (and therefore erasing this backup), I installed 2 of the old drives in my spare computer and once again made a full copy of my NAS data using rsync over the network. This took a long while again, but I wouldn’t skip this step!

6. Conclusion: what did I learn?

- Buying and using refurbished drives was very easy, and the savings are great! I saved approximately 40% compared to the new price. Only time will tell if this was a good deal. I hope to get at least 4 more years out of these drives. That’s my goal, at least…

- Replacing HDDs via a USB3 enclosure is possible with BTRFS; it worked 3 times out of 4! 😭

- Serial debugging is my new best friend! I didn’t detail this part in this post. Let’s just say my NAS is a somewhat exotic NanoPi M4V2, and I couldn’t have unborked my system without a functioning UART adapter. The one I already had on hand didn’t work correctly, so I had to buy a new one. And all the things I did (blindly) to try to fix my system were pointless and wrong.

I hope this post can be useful to someone in the future, or at least was interesting to some of you !

awesome write up!

Just did a raid 10 off cheap 12tb datacenter drives myself. sure, i had to return 3 to get a working set plus a spare, but that’s why you take the time to check them. ALWAYS test your used drives. the resellers churn through batches of these things in the hundreds. sometimes you get lucky and they all work, sometimes half your order just got pulled from a bad batch and you spend a half hour getting them exchanged.

I guess I got lucky with this batch; they all seem to work perfectly. But only time will tell if this was truly a good deal.

that’s fair, it is a wait-and-see kind of gamble. all my working drives, including replacements, had about 2.5 yrs of runtime on them. the bad batch was all in the 3.5 yr range. one never powered on, the others dropped within 24 hours. that extra year of age may not be the cause in itself; more likely they were all pulled from the same datacenter, and the issue was how they were treated there.

use case matters too. this raid with used drives is my media server, and uptime was a factor. my nextcloud is a pair of new 8tb drives plus an rsync to a backup, which i could afford by going used where i can. (and before my selfhosting friends here boo nextcloud, it’s the only web ui my elderly parents could use on their own. so calm down ya clowns)

My media collection is not backed up, except for the spare disks I have now. My photos and documents are encrypted weekly and sent to pCloud. They are also synchronized to my computer and phone with Syncthing. This way, the important files are covered by the 3-2-1 rule.

Nice! syncthing looked pretty great when i played with it. my new raid replaced a jbod and a box of salvaged laptop disks as its archive. got 6 people using my plex server, and telling them downtime would be far less frequent was a nice distraction in the group text from “also i’m movin to jellyfin this summer”.

I love Jellyfin, it’s great! To be honest, I haven’t played with Plex much, so I can’t really compare.

jellyfin means you can skip plex, it’s the same thing. let me see if i can anger some fellow olds. jellyfin is the opensource community giving plex the middle finger it deserves. plex forked from xbmc an eon ago to be the mac version of xbmc, but then it became a company. i paid for a lifetime plex membership like 10 yrs ago, so i’ve just been lazy about switching.

@synapse1278 I recently bought a used 8tb drive off ebay for $45. Manufacturing date on the drive was 2016, but Power_On_Hours was 4 (four!). I asked the seller if they reset SMART data; his response:

"We do not reset power on hours, as we could get in a ton of trouble listing used drives as new bulk etc after altering the power on hours. This one likely was used in/for testing an array etc. "

So I’m pretty pleased with that…

I’d be really curious about where it came from. Was it just in a warehouse somewhere?

I left a comment for the eBay seller and asked the same question, but I did not get a reply.

Just wanted to drop an amazing work compliment!

Thank you

Good write up. Thanks for the good lessons learned section.

Tmux is your friend for running stuff disconnected. And I agree with the other post about btrfs send/receive.
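On the `btrfs send | btrfs receive` suggestion: send works on a read-only snapshot, the destination must be a BTRFS filesystem (the backup drive here is BTRFS), and nested subvolumes are not included in the stream. A minimal sketch that prints the two steps; the helper name and snapshot path are hypothetical.

```bash
#!/usr/bin/env bash
# Print the commands to copy a subvolume with btrfs send/receive:
# 1) take a read-only snapshot (send refuses writable subvolumes),
# 2) stream it into a btrfs filesystem mounted at the destination.
send_receive_plan() {
  local src="$1" snap="$2" dst="$3"
  printf 'btrfs subvolume snapshot -r %s %s\n' "$src" "$snap"
  printf 'btrfs send %s | btrfs receive %s\n' "$snap" "$dst"
}

send_receive_plan /mnt/volume1 /mnt/volume1/.snapshots/backup-2024-10-12 /media/Backup_2024-10-12.btrfs
```

For a later backup, `btrfs send -p <previous snapshot> <new snapshot>` sends only the differences, which is the main practical advantage over re-running rsync over the whole tree.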

> using rsync:

why not `btrfs send | btrfs receive`? is there some advantage to rsync?

did you hotswap the drives after each `btrfs replace` or shutdown and then swap?

what’s your host OS and do the drives spin down if inactive?

thanks for the writeup!

> why not `btrfs send | btrfs receive`? is there some advantage to rsync?

I didn’t think of this. I am familiar with `rsync`, so I went with it without searching for alternatives.

> did you hotswap the drives after each `btrfs replace` or shutdown and then swap?

I did the swap with the system powered down. I don’t know if the NanoPi + SATA hat supports hotswap.

> what’s your host OS and do the drives spin down if inactive?

The NAS runs Armbian. The disks are configured to spin down, yes. I don’t know if this caused the issue while replacing disk 2. I suppose not, since during a replace all the disks are being read continuously. But I don’t know for sure.

Edit: fixed copy-paste mistake with quoted sentences

Btw it looks like you accidentally quoted the same sentence twice.

Ah yes! I just fixed that. Thanks.

I use a refurbished 10tb HDD for my server too and it works just fine

that’s your NAS box?

The black box with the white front and blue LED lights, yes. I designed and built it myself. The case is made of laser-cut plexiglass and 3D-printed parts. The front plate is PLA, and the internals are PETG. It’s built around an ARM single-board computer: a NanoPi M4V2 with a SATA extension hat.

nice work it looks awesome!

Thank you! It was a cool design project, but I ended up not publishing the design, as it is quite difficult to assemble and work with. Here are a couple more photos:

selling these perchance?

No. I initially thought of publishing the files, but there are some issues with the design I didn’t fix, and the whole thing is built around the NanoPi M4V2, which I don’t think you can buy anymore.

I’ve used these kinds of disks in my NAS at least since 2017 with no issues. Just test thoroughly before using. I usually do SMART short and long tests, and then a bit by bit write and read of the entire disk. I’ve caught a couple that failed right away. If it passes that, then it’s usually good for years.

What do you use for the bit-by-bit test, and how long does it take (depending on disk size)? I have read that badblocks could take an entire week to test one 12TB drive, and I didn’t have the patience for that. The long SMART test already took nearly 20 hours in my case.

I just use dd. It can take days, but it’s worth it.

I also have a dd reading my NAS once a month. It catches bad sectors and forces issues into the open.

Probably writing to the whole disk with something like dd and then testing if they can read it.
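A minimal sketch of that write-then-read check with `dd`, assuming bash and GNU coreutils on Linux. It writes zeros and then counts any non-zero byte read back, which catches unreadable or corrupted areas but is a simpler pattern than `badblocks -w` (which writes several patterns and, on disks this large, generally needs a bigger block size such as `-b 4096`). The helper name is hypothetical, and pointed at a real disk it destroys all data.

```bash
#!/usr/bin/env bash
# DESTRUCTIVE: overwrites the first SIZE_MIB MiB of TARGET with zeros, then
# reads them back. Returns 0 if all zeros, 1 on mismatch, 2/3 on write/read errors.
zero_write_verify() {
  local target="$1" size_mib="$2" nonzero
  dd if=/dev/zero of="$target" bs=1M count="$size_mib" conv=fsync status=none || return 2
  # pipefail makes a failing read (dd) fail the whole substitution.
  # For a block device, adding iflag=direct to this dd bypasses the page cache.
  nonzero="$(set -o pipefail; dd if="$target" bs=1M count="$size_mib" status=none | tr -d '\000' | wc -c)" || return 3
  [ "$nonzero" -eq 0 ]
}

# Whole-disk usage (as root, on an EMPTY disk only):
#   zero_write_verify /dev/sdX "$(( $(blockdev --getsize64 /dev/sdX) / 1048576 ))"
```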

I personally wouldn’t go for used drives. However, if you do make sure to get it from a reliable source that offers a warranty. Be ready to replace the disk when the warranty ends.

> Be ready to replace the disk when the warranty ends.

What’s the point of replacing them? The warranty doesn’t keep them from dying, it just means you get a free replacement. The amount of life left on the drives after the warranty expires depends heavily on how they’re used, and most self hosters are pretty gentle on their drives. I could see replacing the drives that are heavily used, but replacing all drives just because their warranty expired seems like a waste of money and effort.

I’ve heard some advice about “burning them in” by running a stress test with random I/O over several days. If they survive, great. If they die, they get returned.

The seller mentions the drives are fully tested, but does not offer a warranty, aside from the eBay 30-day return policy.

Yeah I would want at least 2 years

There is nothing to refurbish in drives. They are just second hand devices. You can check if they are fine pretty easy and you need to take a look at the age (power on hours). I replace drives at 50k-60k hours, no matter if they are fine.

I have 4 6TB HDDs which I got new from work; they have been running 24/7 for the last 6 years.

The 4TB drives they replaced were running for the previous 10 years (bought new as well) and are still in a drawer; they were replaced only to take the opportunity to upgrade to 6TB.

If your refurbished drives last only 4 years, to me that is not a gain: you lost money. New drives should last 10 years in my personal (and debatable) experience. But I think the drives you bought will last more than 4 years.

I’m finding 8 years to be pretty realistic for when I have drive failures, and I did the math when I was buying drives and came to the same conclusion about buying used.

For example, I’m using 16tb drives, and for the Exos ones I’m using, a new drive is like $300 and the used pricing seems to be $180.

If you assume the used drive is 3 years old, and that the expected lifespan is 8 years, then the used drive is very slightly cheaper than the new one.

But the ‘very slight’ is literally just about a dollar-per-year less ($36/drive/year for used and $37.50/drive/year for new), which doesn’t really feel like it’s worth dealing with essentially unwarrantied, unknown, used and possibly abused drives.
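The per-year figures come from spreading each price over the years of service it is expected to deliver, under the 8-year lifespan and 3-year-old used drive assumptions above:

$$
\text{new: } \frac{\$300}{8\ \text{yr}} = \$37.50/\text{yr}
\qquad
\text{used: } \frac{\$180}{(8 - 3)\ \text{yr}} = \$36/\text{yr}
$$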

You could of course get very lucky and get more than 8 years out of the used, or the new one could fail earlier or whatever but, statistically, they’re more or less equally likely to happen to the drives so I didn’t really bother with factoring in those scenarios.

And, frankly, at 8 years it’s time to yank the drives and replace them anyways because you’re so far down the bathtub curve it’s more like a slip n’ slide of death at that point.

I have been far luckier than that all my life, I never had a drive fail on me, and I keep them a looong time.

Maybe once, 20 years ago, a drive failed? Can’t really remember, but it probably did happen. Being on Linux software RAID 1, I just removed it, plugged in a new drive, ran an attach command and forgot about it.

“Old age” disks!

Hahaha. That’s hilarious.

Just like society labeling.

How is the noise? Did you make any model selection based on that?

In a previous post, some people recommended helium-sealed drives for lower noise. The disks I bought are helium-sealed, but at 7200rpm they are definitely a little louder than 5400rpm drives. It’s still acceptable.

Yeah, good advice.

For my parents’ house, with the NAS in their living room, I still use WD Red Plus (not Red Pro): pleasant & quiet (which was basically my only concern). But smol. Can’t find new ones as quiet tho.


I have a Synology NAS, and it makes it all pretty easy. To replace/upgrade a drive, you basically just take one out and put a new one in, wait for it to finish, and then move on to the next drive. The last time I upgraded my 4 drives, it took a couple of days but was painless. RAID is great.
